Researcher says AI needs Human Rights

“Human Rights are more universal and more defined than ethics principles,” said Lindsey Andersen, lead author of the study.

Recently, activists, tech researchers, and columnists have voiced deep concern about the rapid, seemingly unchecked advancement of artificial intelligence. Tech researchers, as well as individuals at tech companies, have called on AI creators to adopt a code of ethics. A code of ethics could add accountability, they argue.

But Lindsey Andersen, a graduate student at the Woodrow Wilson School of Public & International Affairs and lead author of the study, said implementing a code of ethics isn't enough. What AI needs, Andersen said, is Human Rights.

“Human Rights are more universal and more defined than ethics principles,” explained Andersen during her approximately 15-minute presentation outlining a study she wrote with Access Now, a global organization that “defends and extends the digital rights of users at risk around the world,” according to its website.

“There is an entire system of regional, international, and domestic institutions and organizations that provide well-developed frameworks for remedy and articulate the application of human rights law to changing circumstances, including technological developments,” Andersen wrote in the study. “And in cases where domestic law is lacking, the moral legitimacy of human rights carries significant normative power.”

Andersen, a former Fulbright research fellow, spoke about the goals and conclusions of the study on Nov. 8 at Open Gov Hub, a nonprofit in Northwest Washington, D.C. A panel discussion followed the presentation.

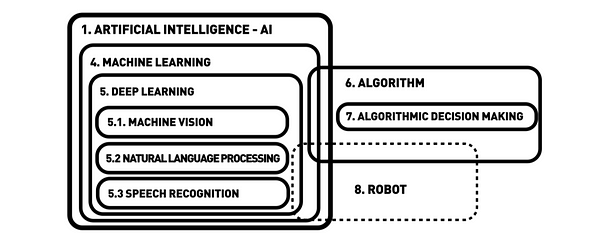

The relationships of the concepts in artificial intelligence (accessnow.org)

The push to add Human Rights to the discussion of AI comes as researchers and activists speak out about Facebook's, Amazon's, and Google's lack of transparency and rapid data collection. Big and small tech companies collect huge amounts of personal data to track users' locations and to create personalized experiences on social media platforms, including personalized advertisements.

“Researchers have developed a machine learning model that can accurately estimate a person’s age, gender, occupation, and marital status just from their cell phone location data alone and in the future, we think these risks will only be compounded as we move toward an increased use of IoT devices,” said Andersen.

Andersen spoke about how AI is used in almost every sphere of society, including criminal justice and the healthcare system. She also talked about how federal and local governments and the private sector use AI, saying that both sectors need to implement measures that ensure fair use and transparency. The study recommends a “higher standard for the public sector regarding the use of AI.”

“States bear the primary duty to promote, protect, respect, and fulfill human rights under international law, and must not engage in or support practices that violate rights, whether in designing or implementing AI systems,” wrote Andersen in the study. “They are required to protect people against human rights abuses, as well as to take positive action to facilitate the enjoyment of rights.”

The private sector, according to the study, should “identify both direct and indirect harm as well as emotional, social, environmental, or other non-financial harm,” and should “consult with relevant stakeholders in an inclusive manner, particularly any affected groups, human rights organizations, and independent human rights and AI experts.”

Referencing the study, Andersen spoke repeatedly about biased algorithms and how police officers use them to predict crime and judges use them to aid in sentencing.

“Any machine that is going to use AI will have bias data,” said Andersen.

To show how Human Rights might be applied to combat injustice in the criminal justice system, the study cites Article 14 of the International Covenant on Civil and Political Rights:

“All persons shall be equal before the courts and tribunals. In the determination of any criminal charge against him, or of his rights and obligations in a suit at law, everyone shall be entitled to a fair and public hearing by a competent, independent and impartial tribunal established by law […] Everyone charged with a criminal offense shall have the right to be presumed innocent until proven guilty according to law.”

Andersen acknowledged during her presentation that using Human Rights to hold AI accountable isn't a new idea. Researchers at Data & Society and the Berkman Klein Center for Internet and Society at Harvard University have offered similar suggestions.
